Amazon Echo, and other ‘smart speaker’ products such as Google Home and Apple HomePod, are marketed as being suited to multi-party, multi-activity settings like the home. In other words, these products are pitched at situations where two or more people are engaged in various activities and can all interact with the smart speaker through its voice interface with ease. In this work, we wanted to examine how this interaction actually unfolds in practice.

We (i.e. my supervisors Joel Fischer and Sarah Sharples, our colleague Stuart Reeves, and I) have published our findings as a paper, which is now available to download. We will present the findings at CHI 2018, the ACM conference on Human Factors in Computing Systems. As a nice surprise, the paper has also won a Best Paper award at CHI, which is given to the top 1% of submissions.

This blog post should give you a flavour of what we found; however, if you’re interested in some great transcripts of interactions with Alexa, I urge you to have a look at Stuart’s more detailed Medium post, or alternatively to read the paper.

Watch my presentation of the work at CHI 2018

A central theme of this work is how people embed use of the device within the ongoing activity of the home. During and following Echo requests, we noticed that other conversationalists often sit in silence (we term this ‘mutually producing silence’). In other words, people pay attention, or orient, to the interaction with the Echo. This orientation means that when trouble with an interaction occurs (and by trouble, we mean anything from the device not responding to it responding incorrectly), people can work together to identify the problem and resolve it.

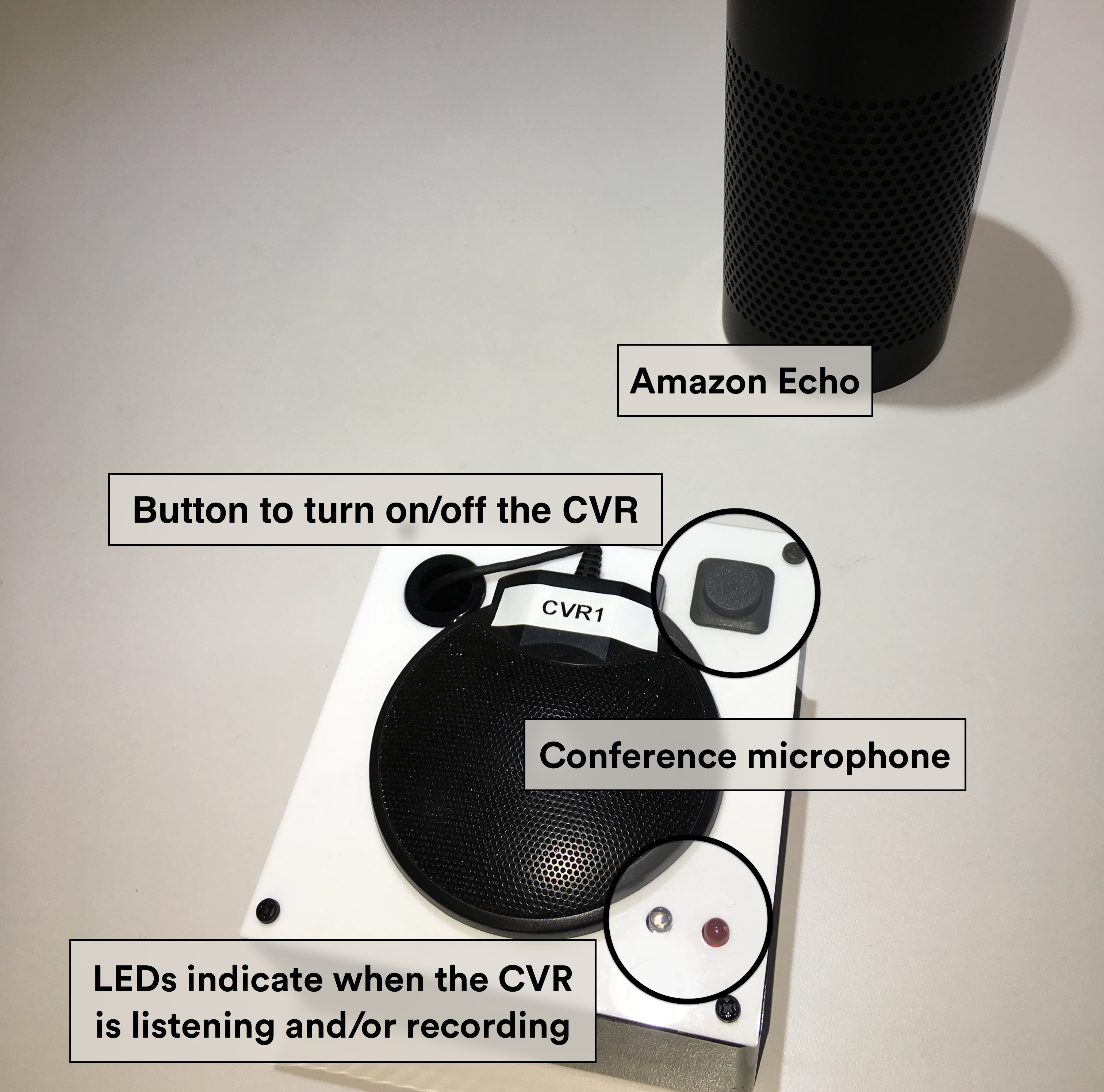

First, to examine how people use a voice-based smart speaker, we needed to capture their interactions non-intrusively and in a way that let the interactions unfold ‘naturally’ (i.e. without a researcher present asking them to use the device). To do this, we recruited families to live with an Amazon Echo for around a month, and also provided them with a separate ‘Conditional Voice Recorder’ (CVR) that constantly listens for the word ‘Alexa’, much like the Amazon Echo does. When the CVR detects the word ‘Alexa’, it saves the last minute of audio from a temporary buffer and continues recording for one further minute. If ‘Alexa’ is detected in this trailing minute, it records a further minute from that point onwards. This gives us contextualising information about what leads up to Amazon Echo use, and about how people deal with the device’s responses. You can grab the source code for the CVR from GitHub.

The Conditional Voice Recorder (CVR)
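To make the CVR’s recording rule concrete, here is a minimal Python sketch that simulates it offline: given the times (in seconds) at which ‘Alexa’ is detected, it works out which stretches of audio would be saved. This is not the CVR’s actual source code (that is on GitHub); the function name `recording_windows`, the constants, and the choice to clip the pre-roll at the start of the session are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple

# Hypothetical constants mirroring the rule described above.
PRE_ROLL_S = 60.0   # audio kept in the rolling buffer before a detection
POST_ROLL_S = 60.0  # audio recorded after each detection


def recording_windows(detections: List[float]) -> List[Tuple[float, float]]:
    """Return (start, end) times of saved audio for wake-word detection times."""
    windows: List[Tuple[float, float]] = []
    for t in sorted(detections):
        if windows and t <= windows[-1][1]:
            # 'Alexa' heard during the trailing minute: record one further
            # minute from this point onwards by extending the current clip.
            start, end = windows[-1]
            windows[-1] = (start, max(end, t + POST_ROLL_S))
        else:
            # New clip: the last minute from the buffer (clipped at the start
            # of the session) plus one further minute after the detection.
            windows.append((max(0.0, t - PRE_ROLL_S), t + POST_ROLL_S))
    return windows


if __name__ == "__main__":
    print(recording_windows([120.0, 150.0, 300.0]))
```

For detections at 120 s, 150 s, and 300 s, this sketch yields two clips: 60 to 210 s (the second ‘Alexa’ falls within the trailing minute, so recording runs on until 150 + 60 s) and 240 to 360 s. Each new clip is saved independently here, so in this simplified model two clips could overlap if a detection occurs shortly after a recording ends.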

We listened to the recordings, indexed them, and set about working out how people made use of the Echo in conversation. Below I’ve summarised three interlinked findings from our study (there are more in the paper).

Our first key takeaway is that use of the Echo gets embedded amongst everyday interactions in the home: during parties, meals, cooking, and so on. This is a crucial finding because it shows that sequences of interaction with the Echo are often not delivered as a continuous stream but are broken up by talk with other people and by other activities in the home. This creates complex situations for voice-controlled devices like the Echo, as more than one person may be dealing with one or more interactions with and around the device.

A remarkable illustration of how finely people embed this interaction is how they use the gap between the wake word and the request. (The wake word is a word or phrase that activates the Echo from its ‘inactive’ state so that you can make a request; for the Echo, the wake word is ‘Alexa’ by default.) Just after someone says ‘Alexa’, somebody else may say something in relation to the request that is about to be made. In our data, such interjections could be approvals, acknowledgements, rejections, or suggestions. This brings to the fore that interactions with the device are not discrete, continuous blocks, but are sequentially interwoven with the activities in the home.

In other cases, at a coarser grain, we also saw household members use the gaps between successive requests to the Echo to talk to each other about other topics. Taken together, this shows how, although the Echo is predominantly a single-user device (after all, it can only deal with one person talking at a time), interactions with it become enmeshed in some of the goings-on of the multi-activity home.

Our second key finding is that when things go wrong, people try and try again to resolve the problem and get the desired outcome. We also recorded participants discussing with each other what went wrong with a particular request (this is not always obvious), including others proposing possible solutions. Sometimes people perceive the problem to be that the Echo ‘doesn’t understand the person who made the request’, and so another person might try the same request. Alternatively, people may change how they say the request, such as its intonation, stress, or rhythm (its ‘prosody’), to get it to work, or even go as far as to use different words for the same purpose. All of these alterations to requests, made both by the original speaker and by others who are present, show how people work to get the desired outcome from an interaction even when the initial request fails.

This stems from how people embed use of the device in the ongoing activity of the home (finding 1, above). During and following Echo requests, we noticed that other conversationalists often sit in silence (we term this ‘mutually producing silence’) while a request is made and for a few seconds afterwards. Our data suggest that people pay attention to interactions with the Echo (in our work, we say that they orient to the interaction with the Echo). This orientation means that when trouble with an interaction occurs (and by trouble, we mean anything from the device not responding to it responding but doing the wrong thing), people can work together to identify the problem and resolve it, drawing on what they observed and their reasoning about what the trouble was.

The final point I want to discuss here is how control of the device differs from what we are perhaps accustomed to when it comes to technology use in social gatherings. Typically, a device has one user at a time, and only that person can make use of it (think of a laptop sitting in front of one person, or a television remote in one person’s hand). If another person wants to use the device, they must ‘negotiate’ a handover of control with whoever currently has access. Our data showed that voice-controlled devices are different, though: anyone can interact with them just by talking to the device (you currently can’t stop someone interacting with a device such as the Echo).

This shifts control of the device from a physical matter of ‘who is holding the token’ (i.e. the television remote, or the computer itself) to an entirely socially managed construct: control of interactions becomes regulated entirely through the ‘politics of the home’. This means that other users’ interactions with the device can only be managed through the social order of the home, and not by, for example, taking the remote away. This feature of interaction with the Echo can also be used for fun, as it allows for competition between users as they each try to get the Echo to do something, relying on the notion that the Echo primarily hears the loudest speaker!

This finding, then, rests on how interaction with the Echo is embedded in interactions in the home (finding 1), and goes on to allow other co-present people to collaboratively involve themselves in each other’s interactions with the device, including helping to resolve troublesome interactions (finding 2).

We make a number of other points in the paper, such as arguing that these interfaces are not ‘conversational’ and that designers should instead think of interactions as ‘request/response’, and discussing the value of our conversation analytic approach to studying interactions with the Echo. You can find more in Stuart’s Medium post and in the paper.

Originally posted at https://www.porcheron.uk/blog/2018/02/12/examining-amazon-echo-use-in-the-home/
