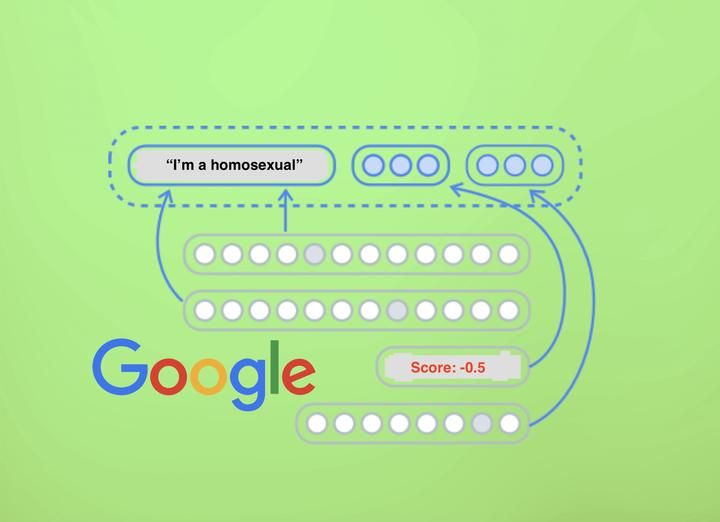

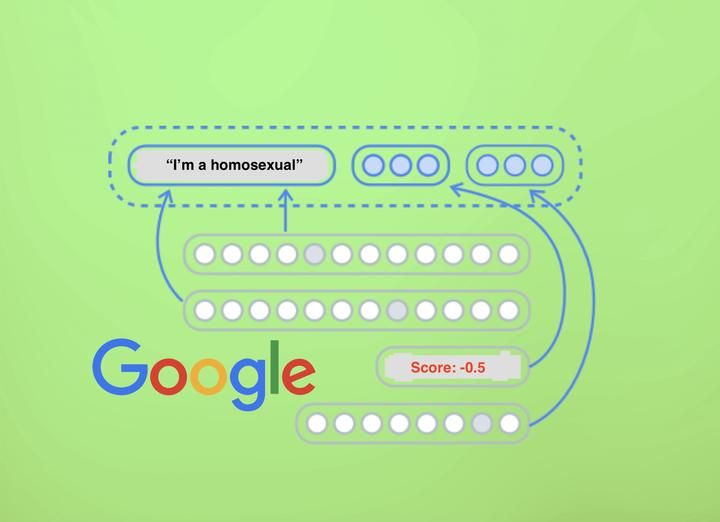

Recently, another attempt at creating some sort of “generalish AI” that was meant to understand society has ended badly. This time it was Google, which built what was essentially one big sentiment analysis tool: it scraped the web and gave phrases a positive or negative score based on what it found. Unsurprisingly, phrases such as “I’m a homosexual” came out negative, and there was outrage as bloggers around the world accused Google of negligence in building a homophobic algorithm, or of some other truly evil sin. The reality, however, is that humans are not some uniform do-gooder collective. They’re a bunch of opinionated and offensive windbags.

Image from Motherboard
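To see why a tool like this simply mirrors the text it learns from, here is a toy sketch in Python. It is not Google's system, whose internals aren't described here. The seed lexicon, the averaging rule, the tiny "scraped" corpus and the placeholder word `group_x` are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical seed lexicon: a handful of words whose sentiment we assume is known.
SEED = {"great": 1.0, "love": 1.0, "awful": -1.0, "hate": -1.0}


def sentence_score(tokens):
    """Mean seed sentiment of the tokens in one sentence (0.0 if no seed words)."""
    hits = [SEED[t] for t in tokens if t in SEED]
    return sum(hits) / len(hits) if hits else 0.0


def learn_scores(corpus):
    """Give every word the mean score of the sentences it appears in."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for sentence in corpus:
        tokens = sentence.lower().split()
        score = sentence_score(tokens)
        for token in set(tokens):  # count each word once per sentence
            totals[token] += score
            counts[token] += 1
    return {token: totals[token] / counts[token] for token in totals}


def phrase_score(phrase, scores):
    """Score a new phrase as the mean learned score of the words we have seen."""
    known = [scores[t] for t in phrase.lower().split() if t in scores]
    return sum(known) / len(known) if known else 0.0


# Invented "scraped" corpus: the placeholder group_x mostly appears next to hostile words.
corpus = [
    "i hate group_x",
    "group_x are awful",
    "i love group_x",
    "what a great day",
]

scores = learn_scores(corpus)
print(round(scores["group_x"], 3))                      # -0.333
print(round(phrase_score("i am group_x", scores), 3))   # -0.167
```

Nothing in the code singles out `group_x`; its negative score comes entirely from the company it keeps in the corpus. Feed the same code a different corpus and you get a different "opinion".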

Such stories of rogue AI will feel instinctively familiar to many. Last year, Microsoft ran afoul of humans-being-douchebags with its Tay chatbot, which turned itself into a Nazi in under a day. They naively built a chatbot that learnt from its conversations with people. Microsoft chose to set it loose on Twitter, but no-one sat down and thought through the crucial problems with this plan. Here’s the kicker they missed: humans aren’t bias-free virtuous beings. In fact, humans, and humanity, function entirely on the foundation of bias.

In both cases, with Tay and the Google Sentiment Analyzer, the algorithms learned things that the company and its developers didn’t intend them to learn. Did the developers really expect something that learns from humanity to pick up only the views that match their own sense of right and wrong? The world is full of people with shitty opinions, and they think your opinions are shitty too.

Image from The Hacker News

This actually boils down to something fundamental about humans: bias. Going back to the Google example, many people are fine with homosexuality, but others think anyone gay should be stoned to death and then spend eternity in hell. If you want to build an AI that acts as a ‘model citizen’ by your own standard, you first need to realise that each person’s biases and outlook took years to cultivate through their religion, family, friends, media, teachers, and so on. People acquire good biases socially, simply by living. But, and this is the kicker: the definition of a “good bias” is unique to you. Each person defines it based on their own experiences and learnings.

Many people think it is right to stone gays; many people don’t. Whether you think that is right depends on the biases you’ve learned. A machine that has ‘been alive’ for 24 hours and started off bias-free has to learn its biases, and it is learning them from the ultimate hellhole of humanity: the World Wide Web. This is the Wild West, where prejudice and hate are rife. Are you really surprised that an algorithm learning from Internet communications turned out prejudiced and hateful? I mean, really?

So why hasn’t the message sunk in with developers that letting a machine learning algorithm loose on the WWW without guards will end with dire consequences? Everyone, even engineers, knows that the world is full of opinionated and biased people, and the Internet provides the greatest mouthpiece to the most vocal and vile. Perhaps it was just an eagerness to release some new software, or perhaps it was just an oversight. Either way, if you build an AI that starts off bias-free and then learns from humanity, don’t be surprised when it turns into a Nazi. If you want an AI that acts and thinks like a stereotypical liberal technology worker (whether such a thing exists is a whole other debate), then maybe you need to develop mechanisms for machines to understand human biases beforehand, just as you teach your child right from wrong so they can evaluate the world themselves.

Originally posted at https://www.porcheron.uk/blog/the-challenge-with-ai-humans-have-biases
