Inspired by the intermedial performance work of Jo Scott, I am beginning to outline a series of live performance experiments to test whether it is possible to evoke the uncanny through intermedial performance. "Intermedial" is used here to highlight the mutually dependent relationship between the performer and the media being used.

Jo Scott improvises with her technology as a means of being present and evoking liveness. This gives her the ability to move her performance in any direction at any time, responding to feedback from her system, the generated media and the audience. By comparison, the iMorphia system as it currently stands does not support this type of live improvisation: characters are selected via a computer interface to the Unity engine and, once chosen, are fixed.

How might a system be created that supports the type of live improvisation offered by Jo’s system? How might different aspects of the uncanny be evoked and changed rapidly and easily? What form might the performance take? What does the performance space look like? What is the content, and what types of technology might be used to deliver a live, interactive, uncanny performance?

How does the performance work of Jo Scott compare to other intermedial performances, such as the work of Rose English, Forced Entertainment and Forkbeard Fantasy? Are there other examples that might be used for comparison and contrast?

I am beginning to imagine a palette of possibilities: a space where objects, screens and devices can be moved around and changed. It would be an intimate space containing one participant, myself as performer/medium, and the intermedial technology of interactive multimedia devices, props, screens and projectors. It would be a playful, experimental space where work might be continually created, developed and trialled over a number of weeks.

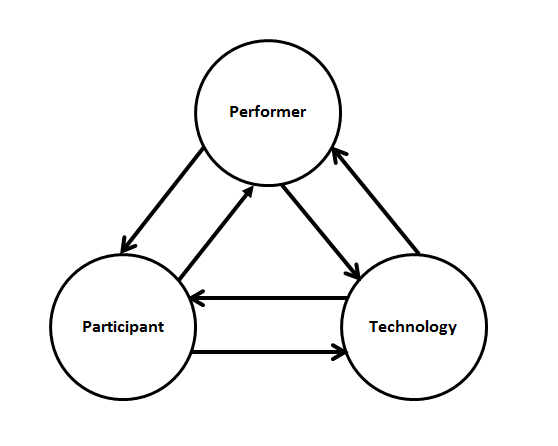

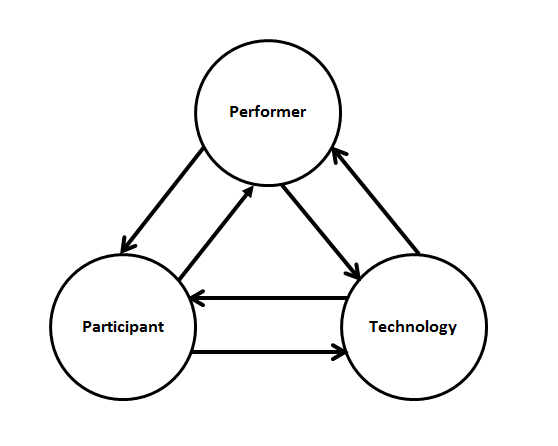

The envisaged performance will require iMorphia to be developed further, extending body mapping and interaction to address some of the areas of future research identified after the workshops, such as face mapping, live body swapping and a mutually interactive relationship between performer, participant and technology.

Face projection, interactive objects and the heightened inter-relationship between performer and virtual projections are seen as key areas where the uncanny might be evoked.

There will need to be a balance between content creation and technical development so that the research can be contained and released.

Face tracking/mapping

Live interactive face mapping is a relatively new phenomenon and is incredibly impressive, with suggestions of the uncanny, as the Omote project demonstrates (video, August 2014):

http://www.youtube.com/watch?v=0T8qugAn5vs

Omote used bespoke software written by the artist Nobumichi Asai. It is highly computationally intensive (two systems are used in parallel) and requires complex, labour-intensive preparation with special make-up and reflective dots for the effect to work correctly.

Producing such an effect may not be possible within the technical and time constraints of this research. However, there are off-the-shelf alternatives that achieve less robust and less accurate face mapping, including a face tracking plugin for Unity and a number of webcam-based applications such as faceshift.

The ability to change the face is also being added to mobile devices and to face-to-face communication tools such as Skype, as the recently launched (December 2014) Looksery software demonstrates:

http://www.youtube.com/watch?v=M_CdaFG6q5E

Alternatively, rather than attempting to create an accurate face tracking system, choreographed actors and crafted content can produce a similar effect:

http://www.youtube.com/watch?v=qk2_IY9w4Og

Originally posted at http://kinectic.net/live-performance/